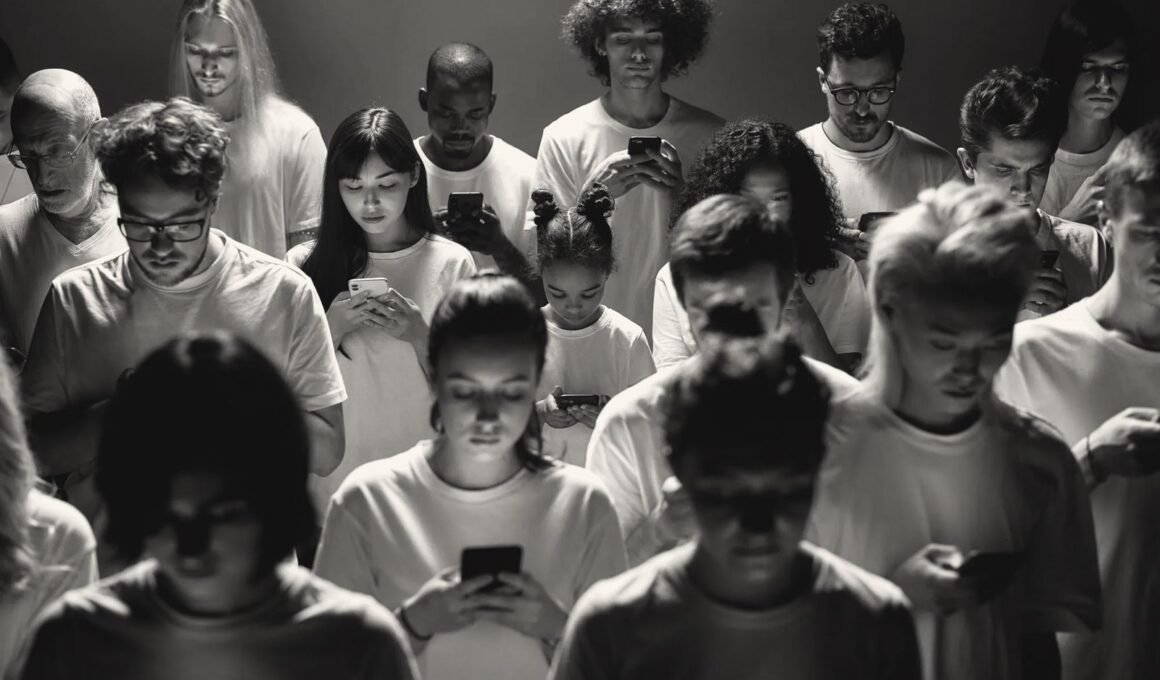

WEST POINT, NEW YORK — If you’ve ever wondered how that cookware ad happened across your internet browser window after you’d spent ten minutes searching for a turkey baster last Thanksgiving, the answer is that you – or more precisely, the devices you use to surf the net – have been microtargeted.

People’s search habits, social media post history, and even retail transaction details are among the many kinds of data up for sale in our cybernetic Elysian Fields, to which advertisers, hackers and political operatives can all gain access in order to sell us a coffee maker, extort money from us, or ostensibly change our vote in an election.

The solution, according to cyber-defense researchers, is the development of regulatory frameworks that can parse through the content and designate its appropriateness for mass consumption. A “Ministry of Truth,” so to speak, that can mitigate any disruptions to the status quo that might seep through in the Wild West of social media platforms.

The treasure trove of data currently being gathered through social media networks and other electronic means is a completely unregulated space, with microtargeting, in particular, spurring intense discussion in the wake of widely publicized allegations of Russian “interference” in the 2016 U.S. elections and the liberal use of data analytics, by Brexit promoters in the UK and the Trump campaign itself, to sway voters.

Hovering in the background of the simmering debate is the growing power of Facebook, Apple and other platform owners, whose monopolistic business practices are facing increasing pushback around the world. Nevertheless, our content landlords still hold the key to the big-data realm by virtue of their dominant position, and whoever wants access to the new oil must kiss the ring of the Big Tech overlords.

As the Biden administration gets underway, an emphasis on cybersecurity as a matter of national security is solidifying. CIA Director nominee William Burns, on his last day of confirmation testimony this past Wednesday, told lawmakers that cyber threats “pose an ever greater risk to society” and promised to “relentlessly sharpen [the CIA’s] capabilities to understand how rivals use cyber and other technological tools, anticipate, detect and deter their use.” The Senate Intelligence Committee had previously approved Burns’ appointment in a unanimous closed-door vote on Tuesday, setting up a vote by the full Senate, where the career diplomat is expected to be confirmed.

Mitigating the “risk to society” Burns warns about is the focus of research scientists at the Army Cyber Institute (ACI), a military think tank established in 2012 at the U.S. Military Academy at West Point with a mandate to engage the Pentagon and federal agencies with “academic and industrial cyber communities” to “build intellectual capital … for the purpose of enabling effective army cyber defense.” A popular cybersecurity industry podcast called CyberWire brought on Maj. Jessica Dawson, Ph.D., from ACI to discuss her paper on microtargeting as a form of information warfare and the ideas floating around this relatively new military outfit regarding the mitigation of microtargeting’s ostensible threat to society.

A question of legitimacy

Right off the bat, Dawson admits that “we don’t actually know” what effect political microtargeting operations like those carried out by Cambridge Analytica in 2016 have, or whether they can really serve as forms of “manipulation or mind control.” Nevertheless, she believes it is a threat that should be taken seriously. She contends that the lack of regulatory oversight in the social media sphere leaves the door wide open to foreign and domestic influence campaigns that “pollute” what she defines as the “U.S. cognitive” domain.

“We are not really recognizing the way that this space can be weaponized,” says Dawson, drawing little distinction between “normal actors, who are just seeking to get a rise out of folks and go viral,” and “domestic actors who are seeking to use this space for power and possibly profit and … foreign actors who are seeking to erode the United States from within.”

Dawson, whose research interests on her ACI bio page include “morality, status, culture” and “moral change,” omits advertisers from the list of threatening actors, stating that microtargeting by “Pampers,” for example, is a totally benign use of data as a commodity that “nobody would probably freak out about.” Corporations that want to sell you a product are not, in her eyes, any kind of menace to society (as long as they are American). But issues emerge when the product turns out to be an ideology and, specifically, a foreign ideology, according to Dawson, who stresses that content designed to “erode [social] cohesion” poses the gravest threat.

The cohesion argument is immediately framed by the researcher in terms of the Covid-19 mask protocols, which Dawson illustrates through a hypothetical scenario where “some random soldier decides not to mask and gets exposed, well now their whole squad has to go into quarantine.” She goes on to expand on the principle by pointing out that the “messaging” around the 2020 election, which cast a shadow of doubt over the results, can interfere with members of the armed forces “following the orders of the office of the President.”

Curiously, Dawson concedes that social media did not originate the questions over the legitimacy of the commander in chief, observing that such misgivings have been around since “Bush v. Gore.” Despite her candor, the effort to corral such expressions under a potential regulatory framework reveals the myopic nature of her approach to what is, in reality, a much more profound existential crisis of a nation that has already lost its legitimacy around the world.

The Ministry of Truth

One of the suggestions Dawson proposes for ways the government can “regulate who is allowed to advertise inside of the United States’ cognitive space” is the creation of a federal agency that would credential advertisers who wish to promote their message on social media. Paying lip service to the inevitable free-speech questions such an agency would raise, Dawson grants that it is a “wicked problem to solve,” and throws in a sinophobic quip for good measure, assuring listeners that “we don’t want to start regulating everything using the artificial intelligence censors that, for example, China is rumored to be using.”

Dawson calls for a “national discussion” to figure out what the U.S. is going to “allow to be advertised” on social media, arguing that it is already done for “cigarettes and alcohol.” She also agrees, in principle, with the host’s suggestion of an FDA-like entity that would require algorithms to go through a testing and approval process before release.

As the interview neared its conclusion, the analogies used to describe the problem of messaging on social platforms veered into puritanical territory. Certain types of social media content were likened to “pollution,” and potential solutions were modeled on the environmental legislation of the 1970s that limited the toxic waste giant corporations were releasing into the environment. Dawson wholeheartedly embraced this comparison, calling it a “very, very good analogy” when it comes to the “pollution of the public sphere,” and added that putting it in terms of mental health is a “critical way of thinking about this.”

Lost in the conversation was the ability of regular people to use their own individual critical thinking skills to sift through the content they may come across. For Dawson, Burns, and others in the growing cybersecurity industry, the “U.S. cognitive space” is a new theater of war that is not to be fought through education and open dialogue, but through hard and fast rules about what you can and cannot think about.